Reductionism

Oxytocin is the cuddle hormone – it is what makes us feel affectionate.

So where is love in all this?

Is this chemical all that there is to this powerful emotion?

Adapted from an image of the oxytocin molecule in Wikimedia Commons

Edgar181 – Accessed 8 Sept. 2015

Introduction – Reductionism

Doesn’t biology, when all is said and done, really boil down to just physics and chemistry?

What are we to make of an assertion like this?

Is it correct . . . or is it just playing with words? Is there something special and unique about biology that cannot be expressed in a simple physicochemical way? If it is true, then will we, in the future, find ourselves replacing biological language with physicochemical language? If you think this is unlikely, then what are the problems or misconceptions that will prevent it from happening?

The questions outlined above relate to one of the most vexed questions in science today – the relationship between the various scientific disciplines: what characteristics do they share, and what makes each distinct? Are some disciplines more scientific than others? What are the differences in principles and methodology between, on the one hand, the so-called hard sciences like physics and chemistry and, on the other, the soft or special sciences like biology, sociology, politics, and economics? Is there a foundational subject or subjects? If biological explanations are ‘improved’ by being ‘broken down’ (explained or analyzed) in terms of physics and chemistry (reductionism), then can explanations be equally improved by being ‘built up’ into greater wholes?

The problem

The problem of reductionism is notoriously complicated because it touches on so many concerns in the philosophy of science. Among the major topics are the relationship between physics and the rest of science, including debates concerning the difference between ‘hard’ and ‘soft’ science, and between physics and the special sciences. What exactly is the difference (if any) between physics and biology, and between the brain as physico-chemical processes and the mind or consciousness?

A brief scanning of the literature on reductionism quickly reveals its complexity as it hits up against a whole lexicon of daunting specialist terms, all warning you of the minefield ahead: constructivism, emergence, foundationalism, holism, organicism, vitalism, eliminativism, supervenience, multiple realization, epiphenomena, teleology, qualia, degeneracy, consilience, granularity, realism and anti-realism, perspectivism, and much more . . . all seemingly mixed up into a scientific and philosophical soup of ideas.

Reductionism lies at the heart of two competing notions or paradigms concerning the way we should be doing science based on different metaphysical systems . . . different assumptions about the nature of reality itself. The two paradigms are not distinct, being related in complex ways. However, for ease of exposition they can be contrasted as, on the one hand, reductionism (foundationalism) and, on the other hand, holism (emergentism).

As understood here:

Reductionism emphasizes: the unity of science; the ideal of mathematics; the foundation of science in the laws, theories and concepts of physics; and the primacy of analysis as a mode of explanation – the understanding of scientific entities in terms of the operation of their parts.

Holism challenges the notion of a unified science, advocates anti-foundationalism by asserting the validity of independent domains of discourse, and defends the equal use and validity of synthesis as a means of scientific explanation – the understanding of scientific entities in terms of their relationship to more encompassing wholes.

The question being posed is ‘whether biology . . . differs in its subject-matter, conceptual framework and methodology from the physical sciences’.[12]

We may be suspicious of reductionist claims but rarely are they subject to close scrutiny by practicing biologists. In this article I shall try to draw some of the threads of this vexed problem together. For simplicity, and to challenge the reader, the article will develop an overall claim based on a series of challengeable principles.

The reductionist challenge

One way of loosely circumscribing reductionism is to regard it as the translation of ideas from one domain of knowledge to another. In this form it is often used as a way of simplifying, debunking, or explaining away.

Reductionist claims usually take the form ‘A is really just B’ or ‘A is nothing but B’. A well-known example would be the statement ‘humans are really just DNA’s way of making more DNA’. Is this a serious, valid, and useful scientific claim?

After being confronted by a reductionist claim of this sort we are left wondering whether we have been cheated – thinking that something important, even critical, has been ignored or passed over – but not knowing what that is.

There is the implication that some subjects, language, or ideas are superfluous, that they can be eliminated altogether or explained and understood in a scientifically more respectable way.

Examples

Actual examples of reduction in the history of science are quite rare but there is the move from classical thermodynamics to statistical mechanics; the transition from physical optics to Maxwell’s electromagnetic theory whose equations have resulted in smartphones and TVs; and the transition from Newtonian mechanics to Einsteinian relativistic mechanics. Maybe in biology there is the translation of Mendel’s gene theory into the biochemistry of DNA. But when do we know that such a task has been completed successfully?

One famous attempt at reduction was that of English philosophers Alfred North Whitehead and Bertrand Russell who, in Principia Mathematica (1910, 1912, 1913, & 2nd edn 1927), examined the foundations of mathematics. By using axioms and inference rules they tried, unsuccessfully, to explain mathematics purely in terms of logic and set theory.

To understand the provocative flavour of reductionism here are some further examples . . .

It might be claimed, for instance, that history is just a fancy name for what is really only biology – the study of human behaviour; that morality is just a set of functional biological adaptations; that concepts and mental images in our minds are just physicochemical processes in our brains; or that sociology is a fiction because there is no such thing as ‘society’ just groups of individuals.

Various forms of reduction have become ‘-isms’: like psychologism – the claim that many aspects of our behaviour can be explained in purely psychological terms; biological determinism – that human behaviour can be explained in purely biological terms; environmental determinism – that social development is largely a consequence of environmental factors; genetic determinism – that genes determine our behaviour more than culture.

In the humanities similar cases, perhaps slightly different in character, might be for example a historical or literary analysis from the perspective of psychoanalysis or Marxist theory.

Of special current relevance is the way that the mind reduces to the brain, the mental to the neural, and the neural to the physico-chemical.

One articulate contemporary statement of scientific reductionism in general is that of Alex Rosenberg, Professor of Philosophy at Duke University in America. In The Atheist’s Guide to Reality: Enjoying Life without Illusions (2011) Rosenberg poses his thesis with an uncompromising directness. He is an advocate of scientism[1] and the claim that ‘science alone gives us genuine knowledge of reality’, that ‘What ultimately exist are just fermions and bosons and the physical laws that describe the way these particles and that of the larger objects made up of them behave’, that ‘the physical facts fix all the facts’ in a ‘purposeless and meaningless world’. Further, since ‘morality is illusory’, the consistent atheist must be a nihilist, ‘albeit a nice one’.

Rosenberg’s view may be characterized as an extreme variant of materialism[5] or physicalism[2] within the host of philosophical positions that can be adopted on such matters.[3][4][5][6][7][8][9] However, this is a particularly hard-nosed approach[10] when compared to naturalism[3] and scientific realism[4] which have a more relaxed attitude to the claims of science.

Rosenberg’s stance is that of physicalist reductionism[12] and it provides a useful target for those with different views.

Unpacking ‘reduction’

Already you may be thinking that these examples are just a matter of woolly thinking, oversimplifications, unreasoned comparisons, or semantic confusions . . . so let’s be more specific.

To get started we need to establish a common understanding, some ground rules. What exactly do we mean by ‘reduction’? What is being reduced when we suggest reducing X to Y . . . are we talking about one, several, or all of the following: properties, terms, concepts, physical objects or phenomena, explanations, meanings, theories, principles, and laws?

Philosophers have found a way of simplifying this problem by distinguishing three kinds of reduction as it relates to: existence, explanation and methodology. This provides us with a broad classification of, on the one hand, the objects of reduction and, on the other, what is being claimed for a reduction.

EXISTENCE – what exists

EXPLANATION – how we can claim that reduction has been achieved

METHODOLOGY – how the reduction is carried out

This distinction will be needed in the discussions to come as it helps to clarify any disagreements about reductionist claims.

Principle 1 – when encountering a reductionist claim it helps to distinguish whether the claim is about existence, explanation, or methodology

Existence

These are reductionist (metaphysical) claims about what ‘really’ exists, made by reducing entities or phenomena to others – often relating to modes of representation and the distinction between ‘appearance’ and ‘reality’. For example, it may be claimed that a table or chair, though seeming to be a solid unitary object, is ‘really’ made up of molecules that consist mostly of space (ontological reduction).

Explanation

These are reductionist claims about our ways of knowing, understanding, and explaining – such as the reduction of one theory to another. For example, that it is best to explain genes in terms of molecular biology (epistemological reduction).

Methodology

This relates to the problems of translating one form of knowledge into another: chemistry into physics, biology into chemistry and so on. For example, what exactly are the factors complicating the translation of words like ‘mitosis’, ‘predator’, ‘hibernation’, and ‘interest rates’ into physicochemical language (methodological reduction)?

Five Faces of reduction

I shall now ask you, the reader, to examine a set of challengeable principles that can be used to assess reductionist claims. The principles are organized around five topics that are key ingredients in the confusions and problems relating to reductionism:

1. The representation of reality in perception, cognition, and language

2. Explanation & causation

3. Scientific fundamentalism – that there is a unity of science based on a foundation of mathematics, physics, universal physical laws and constants, and fundamental particles

4. Emergentism (holism) – wholes, parts, and emergent properties

5. Domains of knowledge and the translation of ideas from one domain of knowledge into those of another

1. Reality & representation

The first topic looks at the ambiguities and confusions that can arise from the way we intuitively structure reality, the way our perception and cognition filter all our experience to give us a uniquely human outlook on reality. It also looks at the way scientific language can conceal errors and ambiguities in the way we describe and represent the world.

2. Explanation & causation

Causation is the glue that we use to bind our scientific explanations and it is, we believe, the bedrock on which science builds its observations and predictions. And yet causation is problematic to scientists and philosophers alike.

3. Foundationalism

This article examines the claim that there is a unity of science based on the foundations of physics and mathematics, that ‘physics fixes all the facts’: that, at least in principle, everything in the universe can be explained in terms of the fundamental constituents of matter and their relations – including normativity, function, purpose, mind, meaning, thoughts and representations. The actual foundations may be treated in terms of matter (the foundational physics of fundamental particles out of which all matter is made) or explanatory axioms (the physical laws that underpin the order of the cosmos).

4. Wholes and parts

This article examines the challenge to foundationalism, that wholes are in some sense more than an aggregation of parts, and that novelty has emerged in the universe in an unpredictable way by giving rise to new and unexpected features and properties – like the emergence of life from inanimate matter, and consciousness from brains.

5. Domains of knowledge

Science tends to arrange its subdisciplines in a sequence that runs from mathematics and logic to physics, chemistry, biology, behaviour and psychology, then the economic, social and political sciences. Is the segregation of scientific knowledge into these domains just a matter of convenience or does it relate in some way to the structure of the world? Related to this question there is the way that each scientific discipline has developed its own particular language, principles, practices, and academic empires. How are these domains of knowledge to communicate with one another? Is it possible to translate one discipline into another?

A preliminary thought to ponder: the challenge for science and philosophy in the 21st century is not just to devise a physical account of the material universe in the form of some kind of unified field theory of space-time, M-theory, or string theory, but to provide an intellectually coherent account of the world that encompasses, among (many) other things, matter, life, the mind, consciousness, normativity, function and purpose, information, meaning, and representation.

1. Reality & representation

Whether something can be ‘reduced’ to something else depends largely on our intuitions about what there ‘is’ . . . about the nature of the objects in existence or, at least, the way we represent them in scientific theories, laws etc. This is a complex topic addressed in the article on representation.

2. Explanation & causation

Causation underlies the workings of the universe and our discourse about it. Anyone who is curious about the natural world must at some time or another in their lives have wondered about the true nature of causation, especially those people with a scientific curiosity. This series of articles on causation became necessary, not only for these reasons, but because causation is so frequently called on to do work in the philosophical debate about reductionism and today’s competing scientific world views.

It is doubtful whether the reduction of causal relations to non-causal features has been achieved, and scientific accounts are strong alternatives, with revisionary non-eliminative accounts finding favour. Can emergent entities play a causal role in the world? Or is causation confined to the physical realm?

The issues to be addressed here are, firstly, whether causation itself can be reduced to something simpler and, secondly, the role that causation plays in causal interactions that operate within and between domains of knowledge. The outline of this article follows the account given by Humphreys in the Oxford Handbook of Causation of 2009.[2]

At the outset it is important to distinguish reduction between the objects of investigation themselves (ontological reduction) from linguistic or conceptual reduction, which is the reduction of our representations of those objects.

Reduction of causation itself

Eliminative reduction of causation

We must decide whether causation is itself amenable to reductive treatment. Reduction may be eliminative, in which case the reduced entity is considered dispensable because it is inaccessible (Hume’s claim that we do not experience causal connection). We can therefore eliminate it from our theoretical discourse and/or from our ontology of real objects (the Mill/Ramsey/Lewis model), substituting phenomena that are more amenable to direct empirical inspection. The most popular theory of this kind is Humean lawlike regularity, but this group would also include the logical positivists, the logical empiricists (e.g. Ernest Nagel, Carl Hempel), Bertrand Russell, and many contemporary physicalists with an empiricist epistemology. Hume’s view was that we arrive at cause through the habit of association, and in this way he removed causal necessity from the world by giving it a psychological foundation. A benign expression of this view would be that ‘C caused E when from initial conditions A described using law-like statements it can be deduced that E’.

Non-eliminative reduction of causation

Causation is so central to everyday explanation, scientific experiment, and action that many have adopted a non-eliminative position. X is reduced to Y but not eliminated, simply expressed in different concepts like probabilities, interventions, or lawlike regularities. Non-eliminativists like the late Australian philosopher David Armstrong hold that causation is essentially a primitive concept that we can at least sometimes access epistemically as contingent relations of nomic necessity among universals and thus amenable to multiple realization. This contrasts with eliminativist accounts, which explain causation in non-causal terms.

Revisionary reduction of causation

Here the reduced concept is modified somewhat, as when folk causation is replaced by scientific causation. Most philosophical and self-conscious accounts of causation are revisionary to a greater or lesser degree.

Circularity

Many accounts of causation include reference to causation-like factors as occurs with natural necessity, counterfactual conditionals, and dispositions in what has become known as the modal circle. The fact that no fully satisfactory account of causation can totally eliminate the notion of cause itself is support for a primitivist case.

Domains of reduction

Discussions in both science and philosophy refer to ‘levels’ or ‘scales’ or ‘domains’ of both objects and discourse. So physics is overtopped by progressively more complex or inclusive layers of reality such as chemistry, biochemistry, biology, sociology etc. This hierarchically stratified characterization of reality is discussed elsewhere. Here the task is to examine the way causation might operate within and between these different objects and domains of discourse.

The attempt at reducing one domain to another is not a straightforward translation, as an account must be given of the different objects, terms, theories, laws, properties and their role in causal processes. The preferred theory of causation (whether, say, a singularist or regularity theory) will be pertinent to what kind of causal reduction may be possible.

Relations between domains

Suppose we are engaged in the reduction of a biological process to one in physics and chemistry, say the reduction of Mendelian genetics to biochemistry, then what kinds of causal interactions might we invoke? The causal relation might be: a relation of identity; an explicit definition; an implicit definition via a theory; a contingent statement of a lawlike connection; a relation of natural or metaphysical necessitation as in supervenience; an explanatory relation; a relation of emergence; a realization relation; a relation of constitution; even causation itself. If indeed the causation were different in different domains then this might render reduction restricted or impossible. Accounts like counterfactual analysis are domain independent (p. 636).

However, there are domain-specific claims such as physicalism’s Humean supervenience. Under some theories causation is restricted to physical causation as the transfer of conserved physical quantities and this is difficult to apply to the social sciences.

Domain-specific causation & physicalism

Could it be that causation in biology is different from that in physics or sociology, or is causation of the same general kind – is there ‘social cause’ and ‘biological cause’ or just ‘cause’? The most contentious area here is mental causation where intentionality is often treated as ‘agency’ rather than ‘event’ causation.

Supervenience

In the 1960s domain reduction was promoted through the reduction of theories via bridging laws (Ernest Nagel). One major challenge for such an approach has been multiple realization, whereby something like ‘pain’ can be expressed physically in so many ways that its further reduction is unlikely, although this has been countered by supervenience accounts. For example, Humean supervenience regards the world as the spatio-temporal distribution of localized physical particulars, with everything else, including laws of nature and causal relations, supervening on this (p. 639). Supervenience is generally regarded as a non-reductive relation.

Functionalism

Functionalism characterizes properties in terms of their causal roles, which allows for multiple realization. Money is causally realized by coins, cheques, promissory notes etc. The role of ‘doorstop’ can be functionally and reducibly defined, so not all cases of multiple realization are irreducible: irreducibility needs to be taken case by case. For Kim (1997; 1999) ‘Functionalization of a property is both necessary and sufficient for reduction …. it explains why reducible properties are predictable and explainable’. Since almost all properties can be functionalized, few need to be candidates for emergent properties (p. 644).

Upward & downward causation

The restriction of cause to physical domains is supported by the downward causation and exclusion argument.

Causal exclusion principle & non-reductive physicalism

The causal exclusion principle states that there cannot be more than one sufficient cause for an effect. If we accept this then how are we to account for the causes we allocate at large scales, say the cause of a rise in interest rates? What is the causal relevance of multiply realizable or functional properties (redness, pain, and mental properties)? Does this principle automatically devolve into smallism, the view that we ultimately explain everything all the way down to leptons and bosons, or smaller and more basic entities when we find them, because they are the ones doing the causal work? How can a macro situation have causal relevance if it can be fully accounted for at the micro scale? These properties then become epiphenomena, by-products with no causal influence of their own.

If C is causally sufficient for E then any other event D is causally irrelevant. Every physical event E has a physical event C causally sufficient for E. If event D supervenes on C then D is distinct from C.
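The premises above can be set out schematically to show how the conclusion is reached. This is only a sketch: the predicate names Suff (is causally sufficient for), Rel (is causally relevant to), Sup (supervenes on) and Phys (is a physical event), and the labels P1 to P3, are introduced here for illustration and are not notation taken from the sources cited.

```latex
% Requires the amsmath package. All predicate names are illustrative assumptions.
\begin{align*}
\text{(P1) Exclusion:}    \quad & \mathrm{Suff}(C,E) \land D \neq C \;\rightarrow\; \neg\,\mathrm{Rel}(D,E) \\
\text{(P2) Completeness:} \quad & \forall E\,\bigl[\mathrm{Phys}(E) \rightarrow \exists C\,\bigl(\mathrm{Phys}(C) \land \mathrm{Suff}(C,E)\bigr)\bigr] \\
\text{(P3) Distinctness:} \quad & \mathrm{Sup}(D,C) \;\rightarrow\; D \neq C \\
\text{(Conclusion)}       \quad & \mathrm{Sup}(D,C) \land \mathrm{Suff}(C,E) \;\rightarrow\; \neg\,\mathrm{Rel}(D,E)
\end{align*}
```

P2 guarantees that a sufficient physical cause C is always available for a physical event E; P3 then makes any supervening event D distinct from C, and P1 rules D out as causally irrelevant. Set out this way, it is clear that resisting the conclusion requires giving up at least one of the three premises.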

There is increasing evidence supporting the causal autonomy of disciplinary discourse or non-reductive physicalism. Properties in the special sciences are not identical to physical properties since they are multiply realized, although they do supervene on (instances of) physical properties since changes in the special properties entail changes in the physical properties. Further, the special properties are causes and effects of other special properties.

A large-scale cause can exclude a small-scale cause. Pain might cause screaming while there is no equivalent neural property. This occurs when the trigger is extrinsic to the system. The pain resulting from a pin prick is initiated by the pin; it cannot possibly be initiated at the neural scale.

The exclusion principle can be applied to any kind of event that supervenes on physical events and shows that there is no clear causal role for supervening events.

The main questions to be addressed in relation to causation and reduction are: can causation itself be reduced; is there a base-level physicochemical causation underlying all other forms of causation; and how does causation operate (a) within non-physicochemical domains of discourse and scales and (b) between non-physicochemical domains of discourse and scales?

In posing these questions it should be noted that it is customary to discuss different academic disciplines as different domains of knowledge that use their own specific terminology, theories and principles. So, for example, we have physics, chemistry, biology, and sociology being referred to as ‘domains of discourse’ and stratified into ‘levels’ or ‘scales’ of existence. From the outset a careful distinction must be made between ontological reduction, the reductive relations between objects themselves, and linguistic or conceptual reduction, which deals with our representations of these objects.

Cause & reductionism

So far in discussing reductionism it has been noted that at present we explain the world scientifically using several scales or perspectives. These scales correspond approximately to particular specialised academic disciplines with their own objects of study including their terminologies, theories, and principles. One possible way of expressing this would be: matter, energy, motion, and force (physics), living organisms (biology), behaviour (psychology), and society (sociology, politics, economics). Each discipline has its own specialist objects of study like quarks (physics), lungs (biology), desires (psychology), and interest rates (economics). Since it has been argued that each discipline is addressing the same physical reality from different perspectives or scales, the question arises as to the causal relationships between these various objects of study: what is the relationship between causes at different scales, perspectives, or, in the old terminology, ‘levels of organisation’ when they deal with different entities? How do we reconcile causation at the fundamental particle scale with causation at the political scale, assuming the physical reality that they are dealing with is the same?

To answer this question we need to do some groundwork … our modest philosophical program is to ask: What is causation and in what sense does it exist? Is it something that exists independently of us and, if not, in what way does it depend on us? Is causation part of the human-centred Manifest Image? What role does causation play in our reasoning? In other words we need to demonstrate that causation is either a fundamental fact of the universe, or some kind of mental construct, or that it can be explained in different and simpler terms.

If we assume that explanation proceeds by analysis or synthesis, and we regard fermions and bosons as the smallest units of matter, then causation must act primarily from the wider context. A rise in interest rates, or the pumping of a heart, cannot be initiated by fermions and bosons themselves. To make sense of the fermions and bosons that exist in a heart we must consider their wider context.

Does causation occur at all scales, depending on its initiators, or is there a privileged foundational scale, with macroscales explained by microscales, so that genes (in humans about 25,000 genes and 100,000 proteins) code for proteins, which give rise to cells, tissues, organs, and the organism? That is, a causal chain that leads to progressively larger, more inclusive, and complex structures. This is the central dogma of genetic determinism. But does causation also occur between cells, organs, or tissues? Are genes triggered by transcription factors that turn them on and off? Is the environment causal from outside the organism, along with other constraining factors at all scales? Homeostasis is a case in point. Evolution occurs through changes in the genotype that are produced by selection of the phenotype, as natural selection expresses the organism-environment continuum.

If ‘levels’ or ‘scales’ do not exist as separate physical objects then there is only one fundamental mode of being. This is simply one physical reality that can be interpreted or explained in different ways: it has no foundational scale or level.

Emergence is weak when descriptions at scale X are shorthand for those at scale Y; it is strong when descriptions at scale X cannot be given in terms of those at scale Y.

Universal laws apply to biology (an unsupported elephant will fall to the ground), but biology has its own causal regularities that are, of their very nature, restricted to living organisms.

A cause can be sufficient for its effect but not necessary (a piece of glass C starting a fire E) – we can infer E from C but not vice-versa; or it may be necessary but not sufficient (the presence of oxygen C for a fire E in a fire-prone region) – we can infer C from E but not vice-versa. Under this characterization cause can be defined as sufficient conditions (or even necessary and sufficient conditions).
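The direction of inference in each case can be stated compactly. The following is a minimal sketch, assuming (as a simplification not made in the text) that ‘C occurred’ and ‘E occurred’ can be treated as simple propositions C and E, and that the conditions are read as material implication:

```latex
% Requires the amsmath package. Reading causes as material conditionals is an assumption.
\begin{align*}
\text{C is sufficient for E:}               \quad & C \rightarrow E
  && \text{(from } C \text{ we may infer } E\text{)} \\
\text{C is necessary for E:}                \quad & E \rightarrow C
  && \text{(from } E \text{ we may infer } C\text{)} \\
\text{C is necessary and sufficient for E:} \quad & C \leftrightarrow E
  && \text{(each may be inferred from the other)}
\end{align*}
```

On this reading the glass is sufficient but not necessary because fires can start in other ways, so E does not imply C; oxygen is necessary but not sufficient because oxygen is present in many places where nothing burns, so C does not imply E.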

Some scales of explanation or causal description are more appropriate than others. It is possible to provide an explanation that is either overly general or overly detailed. What is appropriate depends on the causal structure, on what would provide the most effective terms and structures for empirical investigation. This contrasts with the view that there is a fundamental or foundational scale at which explanation is most complete (Woodward 2009). Causes need to be appropriate to their effects: it seems inappropriate to speak of bosons influencing interest rates, or of interest rates affecting the configuration of sub-atomic particles. Fine-grained explanations may be more stable but not always (Woodward 2009).

One area where this tension expresses itself is in the argument over the mechanism of biological selection in evolution. Should we regard natural selection as ultimately and inevitably a consequence of what is going on in the genes (see Richard Dawkins’s book The Selfish Gene) or are there causal influences that operate between cells, between tissues, between individuals, between populations, and in relation to causes generated by the environment?

Noble, D. 2012. A Theory of Biological Relativity. Interface Focus 2: 55-64.

It is widely assumed that large-scale causes can be reduced to small-scale causes, the macro to micro: that macro causation frequently (but not always) falls under micro laws of nature. This presupposes a means of correlating the relata at the different scales. This might be interpreted as microdeterminism, the claim that the macro world is a consequence of the micro world. The causal order of the macro world emerges out of the causal order of the micro world. A strict interpretation might be that a macro causal relation exists between two events when there are micro descriptions of the events instantiating a physical law of nature and a more relaxed version that there are causal relations between events that supervene. It might also be the case that even if there is causal sufficiency and completeness the existence of necessitating lawful microdeterminism (laws) does not entail causal completeness. Perhaps in some cases there is counterfactual dependence at the macro but not the micro scale.

Granularity & reductionism

We are tempted to think that we can improve on the precision of causal explanations. Could or should we try to improve the precision of causal explanations by giving more detail or being more scientific? For example I might explain how driving over a dog was related to my personal psychology, the biochemical activity going on in my brain, the politics of the suburb where the accident occurred and so on. That is, the explanation could be given using language and concepts taken from different domains of knowledge: psychology, politics, sociology, biochemistry and so on. What is of special interest is that the cause will be different depending on the perspective chosen. For simplicity the level of detail chosen for the explanation is referred to as its granularity. This raises the problem of reduction that is discussed elsewhere. Is there a foundational or more informative scale or terminology that can be used? Is an explanation taken to the smallest possible physical scale the best explanation? Are the causal relations dependent on more metaphysically basic facts like fundamental laws? Do facts about organisms beneficially reduce to biochemical facts … and so on? Is fine grain the best?

Principle 3 – Any description of causation presents the metaphysical challenge of selecting the grain of the terms and conditions to be employed

We can appear to express the same cause using different terms that seem to alter the meaning and therefore the causal relations under consideration. For example, we might replace ‘The match caused the fire’ with ‘Friction acting on phosphorus produced a flame that caused the fire’. This raises the question ‘But what was really the cause?’ with the potential for seemingly different answers when we want only one. The depth of detail in terminology is sometimes referred to as granularity, and it raises the question of whether some explanations are more basic or fundamental than others, and whether some statements can be beneficially reduced to others (reductionism).

This gives us an extended definition of science: science studies the order of the world by investigating causal processes. Causal processes are of many kinds. Though it is contentious, we might add that we must resist the temptation to reduce causes of one kind to causes of another kind. Causally it makes no sense to reduce biology to physics by saying that fermions and bosons cause the heart to beat. A heart might consist of fermions and bosons but these do not have causal efficacy in this sense. This takes us away from the traditional approach to defining science, which has been in terms of its methodology (the hypothetico-deductive or deductive-nomological method).

Multiple realization

Physicalists can be divided into two camps: those that think everything can be reduced to physics (reductive physicalists) and those that do not (nonreductive physicalists). The reductive physicalist claims a type-identity thesis such that, for example, mental properties like feelings are identical with physical properties. On this view it seems mistaken to speak of two entities, one acting causally on the other, since the two are in fact one and the same. A similar non-causal connection holds between temperature and mean molecular kinetic energy, and perhaps also between life and complex biochemistry. The question arises, though, as to the identity of objects. Is pain physically identical in a human and a herring? Here it seems that pain can be expressed in many different physical ways, known as ‘multiple realization’. This attack on the type-identity thesis led to the modified claim that mental states are identifiable with functional states, which then allows multiple realization, a functional property being understood in terms of the causal role it plays. However, we can think of pain as being either coarse-grained or fine-grained: either one thing, or a mix of properties hardly warranting aggregation under a single category.

Emergence

Reduction is generally contrasted with emergence. Accounts of emergence are rarely causal in form. Why cannot ‘horizontal’ causation give rise to emergent features within the same domain?

3. Scientific fundamentalism

It might be assumed that science provides us with the most secure form of knowledge and that, within science, the most secure forms of knowledge are mathematics and physics. But why is this so?

The explanatory regress

We explain one ‘thing’ in terms of another – we do not explain it in terms of itself. Reductionism, like all science, is a form of explanation: it gives a clarification, simplification, reasons, or justification. And it does so by explaining the whole in terms of its parts.

Justification

Aristotle observed that explanations, to be logically consistent, require further explanation. Like a child, we can continue to ask ‘but why?’, demanding yet more explanations as justification.

In practice, at some stage in the explanatory process we accept one particular explanation as sufficient for our purposes – but that does not mean that, logically, the demand for further explanation cannot continue.

Fundamentalism

Explanations, like philosophical justification, can enter an infinite regress or lapse into circularity. The only way out of this dilemma is to draw a line in the sand, to accept one particular explanation as sufficient for purpose, and then use this as a point of security or foundation for further inferences.

This fundamentalism can then serve as an unquestioned bedrock of self-evident or unjustified truth or axiom (sometimes called a primitive or brute fact). A good example of a scientific brute fact is a law of physics.

We feel a compulsion to be as fundamental as possible in our explanations: if further questions can be posed then the problem has not been adequately addressed. Scientific explanation seems to stop at physical constants and laws – even though we cannot explain why these laws are as they are or, indeed, why there are any laws at all. Mathematics is the cardinal case of theories and explanations built on axioms.

Coherentism

An alternative to fundamentalism is coherentism whereby beliefs must hang together, forming a coherent web of interlocked ideas.

Semantics, metaphor, definition

Much turns on what we assume is meant by ‘foundational’ or ‘fundamental’ and our mental characterization of ‘reduction’.

Fundamental

We have seen that one way of bringing a regress to a halt is to find an explanation that does not need justification – one that is beyond question, primitive, self-evident, or a brute fact. It then becomes futile looking for further definitions, explanations or proofs because such foundational concepts presuppose the things they are meant to be explaining.

In mathematics these basic assumptions are known as axioms and they form the foundational logical structure on which all mathematics rests: if the axioms are unreliable, then the entire edifice comes crashing down.

This is the mode of thinking that we can call scientific fundamentalism. Aristotle used this principle to underpin his logic of scientific demonstration – the famous deductive syllogism. This was a form of argument which first stated a universally secure foundational principle, then declared a particular instance, such that the premises necessarily entailed the conclusion (e.g. All swans are white (foundational or universal principle), this is a swan (particular instance), therefore this swan is white).

An inductive argument, by contrast, reasons from observed instances to a probable conclusion (e.g. This is a swan, all observed swans have been white, therefore this swan is probably white). The conclusion of a deductive argument appears certain while that of an inductive argument has degrees of probability that depend on the quality of evidence.

Principle 2 – Foundationalism – is the search for secure assertions that can be taken as the underpinning for other statements and assertions

The overwhelming character of foundationalism or fundamentalism is that of ranked dependency: some entities only exist, have authenticity, or can be explained because of others. They are diminished in relation to something else of greater significance.

Principle 3 – foundationalism or fundamentalism are relations of dependency – where one object depends on, or is subordinate to, the existence, explanation, or method of investigation, of another

Reduction

How do we represent ‘reduction’ in our mind’s eye? There are two objects: the reducee (that which is reduced) and the reducer (that to which it is reduced). The word is a metaphor derived from the Latin reducere: to bring back, to be assimilated by, or to diminish. We imagine the reducer as in some way prior to, or more basic than, the reducee. Sometimes this is treated as a process of elimination (eliminativism), as when we regard the description of mental illness (reducer) as eliminating or substituting for possession by demons (reducee), or the idea of oxygen (reducer) replacing that of phlogiston (reducee).

Whether the reducee is eliminated, subsumed, or replaced by the reducer, a prioritization or ranking has taken place: the reducer has been prioritized over the reducee (for whatever reason). Ranking and prioritization are characteristics of our minds, not of nature so whenever we perform a ‘reduction’ we need to determine whether we are assuming that the reduction occurs in nature or in our minds.

Principle 4 – ‘reduction’ is metaphorical language used for the prioritization or ranking of something in relation to something else. It occurs in our minds, not in nature

Maths & physics

‘All science is either physics or stamp collecting’

Ernest Rutherford, British chemist and physicist c. 1900

Many reasons can be found for placing mathematics and physics at the forefront of the sciences. Since at least the time of the classical philosophers of Ancient Greece, mathematics has been treated as a model or template for all knowledge, including physics, as the mode of thinking towards which all other thinking should aspire. A sign above the entrance to Plato’s Academy in ancient Athens read: ‘Let no-one ignorant of geometry enter here’.

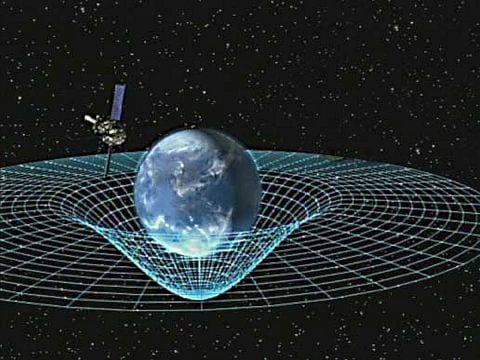

Artist’s impression of Gravity Probe B orbiting the Earth to measure space-time

This is a four-dimensional description of the universe including height, width, length, and time using differential geometry

Differential geometry is the language in which Einstein’s General Theory of Relativity expresses the smooth manifold that is the curvature of space-time – which allows us to position satellites in orbit around the earth. Differential geometry is also used to study gravitational lensing and black holes

The Riemannian geometry of relativity is a non-Euclidean geometry of curved space

Courtesy Wikimedia Commons

Image sourced from NASA at http://www.nasa.gov/mission_pages/gpb/gpb_012.html

Mathematics had practical application beyond astronomy: it provided the precision needed to engineer the magnificent monumental architecture we associate with classical civilization. Numerologists like Pythagoras (c. 570–495 BCE) became cult figures for thinking men. The pre-Socratic philosophers had examined the nature of substance, looking for universal properties and fundamental elements, bequeathing to their successors the idea of four foundational elements – Earth, Air, Fire, and Water – in a tradition that continued into the Medieval world, along with Democritus’s idea of matter being composed of tiny indivisible particles called atoms. The study of living organisms, we believe, did not really get started until the time of Aristotle (zoology) and Theophrastus (botany). Only then do we see the emergence of a critical analytic curiosity about organisms themselves rather than just their utilitarian value as food, medicines, and materials. So biology, it seems, arrived as an afterthought in scientific enquiry, as expressed so eloquently in Aristotle’s ‘Invitation to Biology’.

‘It is not good enough to study the stars no matter how perfect they may be. Rather we must also study the humblest creatures even if they seem repugnant to us. And that is because all animals have something of the good, something of the divine, something of the beautiful’ … ‘inherent in each of them there is something natural and beautiful. Nothing is accidental in the works of nature: everything is, absolutely, for the sake of something else. The purpose for which each has come together, or come into being, deserves its place among what is beautiful’

Aristotle – De Partibus Animalium (The Parts of Animals) – 645 a15

The universality of mathematics

One feature of the 17th century Scientific Revolution was the unification by Kepler, Newton, and others of subjects like optics and astronomy with physics to yield what are sometimes referred to as the ‘mathematical’ or ‘exact’ sciences. These approximate the exactness and precision of mathematics. Philosophers from Descartes, Leibniz, and Kant to Bertrand Russell and the logical positivists have regarded these subjects as paradigms of rational and objective knowledge because they are quantitative investigations of the physical causes of natural phenomena using rigorous hypothesis testing to yield precisely quantifiable predictions.

Mathematical knowledge has a unique and appealing beauty. It gives us knowledge that is certain; incorrigible (it does not undergo revision in the way that empirical facts do); timeless or eternal (we are inclined to think that 2 + 2 = 4 must always be true: it was true before humans occupied the world and it would even be true if no universe existed); and necessary (its truths seem to lie outside our world of space and time and yet they can be grasped by our reason; they could not be otherwise). In addition, numbers are not causally interactive.

All this makes mathematical knowledge highly abstract since we are not really sure what it is actually about. The simple answer is of course ‘it is about numbers’, but the concept of number has baffled philosophers from the earliest times. If numbers do not actually exist in space and time and they are causally inert (they are abstract objects) then how can we have any knowledge of them? There is no universally agreed answer to this question but there are three broad approaches: either numbers are independent abstract objects, or they are in the world, or they are mental constructs. The details need not concern us, but if numbers do not depend on experience then perhaps we have some special faculty of numerical perception (say, the intuitive abstract objects of Kant), or we can relate them to set theory, to objects in the world (logicism), or they are simply mental constructs. Each of these positions has major difficulties. The fact that mathematics is so abstract might seem to give us every reason to dismiss it as some kind of mental construct, a phantom of our minds. But maths has been applied directly to the world in a practical and economic way that has had an immeasurable impact on human life (see, for example, Gravity Probe B illustrated above). There are many facts about the material world that were first suggested by mathematics before being empirically confirmed – for example, the Higgs boson, gravitational waves, the existence of Neptune, and the speed of light.

Because numbers seem to have a special kind of reality (and probably under the influence of the charismatic Pythagoras) Plato postulated his world of Forms. This was a world of timeless truths, of generalities, not to be thought of as a separate place from Earth, like a heaven: it was a realm of ideas that could be accessed and applied by reason. This was a special kind of objective knowledge superior to empirical knowledge which, being derived from experience and sensation, was contingent and corrigible.

But how can we possibly believe in the objectivity of such an abstract realm and, anyway, how could we possibly connect with it?

Aristotle did not believe in Plato’s world of Forms, considering number to exist in the world as a property of objects. But, as philosophers later pointed out, how can number exist in a pair of shoes (one pair or two shoes)? Is the property in such a case 1 or 2? The philosopher Kant believed mathematics to be a form of innate intuition, an expression of our human sense of space and time. Arithmetic expressed, through number, our linear and sequential experience of time, while geometry was a way of representing our sense of space. For Kant, then, mathematics was an abstraction that came from our heads; it did not exist objectively in the world.

The subjectivity or objectivity of number (whether numbers are real) remains a matter for intense intellectual debate. The impact of mathematics on the world cannot be questioned, and the security we feel as a consequence of its necessity, universality, and certainty has given it a special place in the scientific vision of reality . . . so it is hardly surprising that it has been emulated by other disciplines. In physics we see its universality reflected in the laws of physics.

Modernity has maintained its reverence for the application of mathematics to scientific theories and concepts, but with the recognition that maths, at its core, is logical not empirical. It is founded on conditional statements (if … then): if X (this may be an axiom) then Y. As philosopher David Hume expressed it, maths is about ‘relations of ideas’ not ‘matters of fact’.

Principle 5 – Mathematics was inherited from the ancient world as the most secure form of knowledge. Since mathematics provided certain, necessary, timeless and universal truth it was regarded as the form of knowledge against which the statements of all science could be measured, and to which all science should aspire

Smallism

Physics, in investigating the nature of matter, proceeds analytically by breaking it up into ever smaller parts, a process that, over the years, has always arrived at the world’s apparent ‘rock bottom’ material constituents, albeit different ones in each era. In 1947 these physical building blocks were electrons, protons and neutrons; later they became quarks and other sub-atomic particles; today we have fermions and bosons. In this way all our explanations of matter have brought us to an end point, what we might indeed call the ‘fundamental reality’ of matter and existence . . . the smallest scientifically acceptable units as described by physics.

Principle 3 – Smallism – physics explains matter by proceeding analytically and experimentally to discover its smallest indivisible constituents, its fundamental particles. These are sometimes regarded as the foundational ingredients of ‘reality’

Fundamentalism

We can refer to our intuition that the small units of physics and chemistry are fundamental to both matter and material explanation as ‘scientific fundamentalism’. From this flows the sense of what has also been called ‘generative atomism’, the belief that, like a child’s Lego set, any whole can be built out of its fundamental building blocks. To understand the whole we must start with the parts. Small units, it might seem, somehow have greater scientific credibility: they are more authoritative and reliable; they provide better explanations; they are less complicated and therefore more easily understood; and they are objects studied by physics.

Principle 4 – Fundamentalism – is the assumption that all scientific explanation of matter must ultimately reduce to explanation of the smallest known particles of matter and their interactions

In arriving at the smallest or fundamental constituents of matter we have a feeling of finality: being fundamental we might feel that these constituents are in some sense more real than the wholes of which they were a part. But this is clearly some kind of mental trickery, a cognitive illusion. There is nothing more ‘real’ about a fundamental particle than an elephant. Indeed, because we can see, touch, and hear an elephant we might argue that the elephant is more empirically real than an invisible fermion or boson (which has a smaller wavelength than that of light). We regard small things as special not because of their mere existence (their ontology or being) but because of their role in analysis and explanation (their significance is epistemological). They are part of our habitual explanation of wholes in terms of their components and the relations between these components. Following Aristotle’s explanatory regress our explanations must therefore bottom out at the smallest particles we know at any point in history.

Sometimes referred to as ‘ontological reduction’ this principle asserts that no physical object ‘exists’ more or less than any other. Smaller units of matter are no more ‘real’ than larger units of matter, nor are more inclusive or less inclusive units, or even more or less complex units. In terms of existence or reality atoms, rocks, bacteria, and humans are equals.

Principle 5 – All matter exists equally: no physical object ‘exists’ more or less than any other. Smaller units of matter are no more ‘real’ than larger units of matter, nor are more inclusive or less inclusive units, or more complex or less complex units (principle of flat ontology)

Reduction, organization, explanatory power

What is controversial in reductionism and science today is not the matter itself (ontological reduction) – but the nature of its organisation, the relations between its parts (epistemological reduction) – especially the parts of living organisms. We must therefore look for other reasons for our prioritization of one domain of knowledge over another, for the intuition that explanations in one domain are in some way superior to (have greater explanatory power than) those in another: why, for example, we might consider it useful to think of biology in terms of physico-chemical processes. Why does scientific fundamentalism have such persuasive power over our general attitude to science? If all matter is ontologically equivalent then it is our cognitive focus that is making a distinction between different domains or scales of existence (the physicochemical, biological, social, psychological and so on). Analysis has explanatory power but this does not make the parts under consideration, either their size or inclusiveness, more ‘real’. On reflection we realise that no sort of matter is more real or fundamental in itself. Matter is just matter: small matter is just smaller than big matter; it does not have properties that make it existentially privileged in any way. So, in terms of material reality or existence (ontology) a bison is just as real as a boson.

When we take an overview of all the sciences is it true that ‘Particle physics is the foundational subject underlying – and in some sense explaining – all the others’?[1] Could this be simply a comment on the way analysis is a habituated mode of explanation? To investigate the regress of scientific explanation to foundational particle physics we need to look at different kinds of explanation.

Explanatory rock bottom and adequate explanation

We might assume that, of necessity, the explanatory regress passes to ever smaller and ‘more fundamental’ material objects. But this is not inevitable: sometimes one particular kind of explanation is sufficient. Sometimes we feel no need to enter an explanatory regress. One particular answer is adequate.

Here are a couple of everyday examples of explanation. First, if asked ‘Why did the chicken cross the road?’ we could call on answers from scientific specialists such as a chicken biochemist, a neurologist, an endocrinologist, and an animal psychologist. But what if we were told that the chicken was being chased by a fox? This, surely, for most of us, is a satisfying and sufficient answer to our question. We do not need or desire to be told anything else. Does this mean that in this case the scientific answers were incorrect or inferior in some way? No, only that their explanations were not the most appropriate for the circumstances under consideration. Statements like ‘polar bears hibernate in winter’, ‘inflation can be managed by adjusting interest rates’, ‘evolution is replication with variation under selection’, or even ‘E = mc²’ appear sufficient in themselves: their veracity may be challenged but we do not think they need reformulating or reducing to improve or clarify what is being expressed.

Practical incoherence

Firstly, there is the practical absurdity of trying to explain all phenomena in terms of the smallest workable scientific particles. What is to be achieved by explaining biological facts in this way, like the fact that polar bears hibernate in winter? Examples become more ludicrous as we consider wider scientific contexts. How could we possibly explain a rise in interest rates in terms of fundamental physical particles and the laws of physics? What would such an explanation possibly look like? It is not that such an explanation is logically impossible. We can imagine a supercomputer of the future that could enumerate the many causal factors at play in such a situation, but we simply do not think this way, nor do we need to. Explaining the causes of an interest rate rise in physicochemical terms would not simplify matters or give greater clarity; it would entail an explanation so complex as to be barely imaginable.

What then constitutes a satisfactory scientific answer to a scientific question?

Principle 6 – The principle of sufficient explanation: explanations are fit for purpose, they do not need to be circular, foundational, or part of an infinite regress

This example demonstrates the multi-causal nature of many occurrences – like car and plane crashes. Questions about cause(s) in such situations are not abandoned because of their complexity since they must achieve a resolution in a court of law. In many instances, in spite of the apparent complexity, rulings are readily made.

Our intuitive desire for foundational explanations creates several difficulties.

The primacy of analysis – generative atomism

If someone asks ‘What is a heart and how does it work?’ we might answer analytically by treating the heart as a whole and explaining the parts and how they interact. Alternatively we might answer synthetically by treating the heart as a part and explaining how it interacts with other organs to contribute to the functioning of the body as a whole.

Much of science proceeds by explanatory analysis, breaking down physical entities into their constituent parts. But here too Aristotle’s dictum applies, as we are inclined to proceed in a regress to ever smaller parts until we feel we have reached rock bottom, the world’s fundamental particles. There has, in the course of history, been a variable rock bottom. If the future continues as the past then there is nothing absolute, necessary, or certain about the particles that make up rock bottom. Democritus defined atoms as indivisible particles but physics has split the atom again and again, with today perhaps fermions and bosons approximating the foundational bricks out of which the universe is constructed.

Scope – universality of physical constants

Physics approaches mathematics in the (near) universality of its physical constants. Since it has a universal scope it also has an all-embracing character that is not shared by other sciences: its principles, theories, and laws are of such generality that they encompass all matter except under the most extreme situations. A falling stone and a falling monkey both conform to Newton’s laws of gravitational attraction. Physics tries to explain the world not only at the smallest scale, as the behaviour of fundamental particles, but also at the widest scale, as constants or constraints that apply universally to all matter.

The foundations of science are generally taken to lie in mathematics and physics because their basic assumptions have universal application in two important ways: firstly, physics works with the stuff of the universe at its extremes – from the smallest particles to the cosmos in its entirety; secondly

Principle 7 – Physics combines with mathematics to formulate constants and constraints that apply not only to the smallest known particles but to the universe as a whole and therefore its scope is wider than that of other sciences

The challenge to scientific fundamentalism

So what have we decided constitutes something being more scientific or less scientific?

Arguing that one science is ‘more scientific’ than another requires an extended justification. So far we might claim, for example, that physics encompasses all matter, while biology only deals with living matter. Physics deals with generalities and regularities that apply throughout the universe while biology only deals with the subset of generalities that relate to living organisms. Whatever principles and generalities we can establish in relation to life appear to lack the scope and reliability that we see in physical laws.

That both a rock and an elephant conform to the same effects of gravity does not automatically mean that physics is more fundamental.

Adding value

We might intuitively feel that the objects of an explanation (the explanans) are more fundamental than the object being explained (the explanandum)

True science, special science, hard and soft science

Has this account so far established a clear distinction between fundamental or foundational science and other science? Can we distinguish between hard and soft sciences, or indeed between science and non-science – or are such distinctions just a matter of semantics? The term ‘special sciences’ is generally used to denote those sciences dealing with a restricted class of objects as, say, biology (living organisms), and psychology (minds) while physics, in contrast, is known as ‘general science’. Reductionism would maintain that the special sciences are, in principle, reducible to physics or entities that may be described by physics.

Can we establish a clear benchmark using criteria of certainty, necessity, universality, corrigibility (falsifiability), or predictive capacity by which to rank in order the following areas of study: mathematics, physics, astrology, genetics, biology, psychoanalysis, psychology, history, political science, sociology, and economics? Would this establish a reliable table of scientific merit? Are such ranking criteria appropriate or should other factors be considered and, if so, what would they be?

In spite of many historical attempts, the philosophy of science has failed to establish uncontroversial necessary and sufficient conditions that would satisfy a definition of ‘science’ (see Science and reason). At present it appears that what we call science is, more or less, our most rigorous application of reason to an assemblage of theories, principles, and practices that share a family resemblance as a means of enquiry. It is this that has proved our most effective way of organising the knowledge we use to understand, explain, and manage the natural world.

In at least a practical and intellectual sense the special sciences are autonomous, their explanations, methodologies, terms, and objects of study are perceived as self-sufficient without any requirement or benefits flowing from translation to another scale or ‘lower level’ in spite of assumptions about successful reductions in the past and the causal completeness of physics.

Fundamental can be ontic (that out of which everything is made – microphysics) or epistemic (that to which everything conforms).

When we reduce, are we suggesting a relation of identity between the reduced and reducing entities that justifies the elimination of the reduced entity, or are we merely referring to different modes of describing the same thing?

Method & subject-matter

Abstraction-reduction

It is a characteristic of explanation that it abstracts: it considers one particular aspect of the natural world to the exclusion of a more general context. In general our focus is on the explanation, not the context, the context being assumed or taken for granted. When a biologist gives an explanation of the way a heart pumps blood, it is assumed that the laws of physics are in operation – this does not have to be stated. Thus all explanations we provide have two key characteristics: firstly, abstraction – that is, they abstract from a greater whole, they focus on a particular situation or object while ignoring the context; secondly, they enter a potential analytic or synthetic regress. Explanations thus resemble our perceptive and cognitive focus by paying attention to a particular set of circumstances (foreground) while ignoring the wider context (background). In providing an explanation there is a kind of unspoken rider … something along the lines … ‘assuming the uniformity of nature, and other things being equal (ceteris paribus)’.

Principle 8 – Explanations abstract information from a wider context

(It is a characteristic of explanations that they tend to abstract (reduce) from the whole (the wider context). An explanation considers one aspect to the exclusion of others. An explanation is regarded as satisfactory, or ‘complete’, when it is sufficient for its purpose; it cannot account for the full context – which is taken for granted. When a biologist explains the way a heart pumps blood, it is assumed that the laws of physics are in operation – this does not have to be stated in addition to make the explanation complete.

Though most explanations ignore the wider context, they have the potential to enter either an analytic or synthetic regress. That is, the explanation can proceed by progressive reduction and simplification (analysis) or it can consider an ever-widening context (synthesis).

Explanations resemble our perceptive and cognitive focus by paying attention to a particular set of circumstances (foreground) while ignoring the wider context (background). In providing any explanation there is an unspoken rider . . . something along the lines . . . ‘assuming the uniformity of nature, and other things being equal (ceteris paribus)’.)

Proximate & ultimate explanation

Is sex for recreation or procreation?

A proximate explanation is the explanation that is closest to the event that is to be explained, while an ultimate explanation is a more distant reason. In behaviour a proximate cause is the immediate trigger for that behaviour: the proximate cause for running might be a gun shot, the ultimate cause being survival. Biology itself divides in its approach to proximate and ultimate causes. Ultimate causes usually relate to evolution and adaptation and therefore function, answering the question of why selection favoured that trait – and the answers tend to be teleological. Proximate causes deal with day-to-day situations and immediate causation. Proximate and ultimate explanations are complementary: they are not in opposition, with one being better or more explanatory than the other; both have their place. There is, however, a trap for the unwary, since proximate answers can be mistakenly given to ultimate questions.

So, one possibility is that there is no privileged perspective that entails all others: each is equally valid and the explanation that is most appropriate will depend on the particular circumstances. In all this we are abstracting and studying certain factors while ignoring others. When we study the genetic code we do not consider it appropriate to think about electrons and quantum mechanics: when we study the heart we do not worry about gravity or consult the periodic table.

Principle 9 – Satisfactory explanations generally depend, not on the size of the units under consideration or the inclusiveness of the frame of reference, but the plausibility, effectiveness, or utility of the answer in relation to the question posed.

So, sex is for both procreation and pleasure.

(Is the explanation contingent on our human interests and limitations or is it a full causal account?)

4. Unity of science, spatiotemporal boundaries, scope & scale

As science progressed it provided increasingly elegant summations of knowledge about the physical world. Apparently disparate phenomena were united under common laws that could be expressed using mathematical equations: the motion of the planets, the behaviour of fluids, electricity, and light. The integration of physics and mathematics had such explanatory and predictive power in relation to so many phenomena that there seemed no end to what they might achieve. Gravity was a universal force that treated falling rocks and falling monkeys with absolute equality. Physics embraced space and time, matter and energy – and that was mighty close to everything. Its explanatory breadth and predictive power were, and still are, thoroughly demonstrated through its spin-off technology. Today our GPS systems integrate space flight and complex electronics with relativity theory and quantum physics to provide flat earth maps on our car navigation systems. There was a vision of physics as a fundamental discipline incorporating all other knowledge. Physics was universal in scope and scale while other scientific disciplines dealt with only sub-sets of the physics enterprise. So, for example, physics encompassed all matter, biology only living matter, animal behaviour all sentient living matter, sociology humans as they interact in groups, anthropology human beings, human psychology human brains and behaviour. This characterization of science presents us with a metaphysical monism: there is one scientific truth for one reality based on one set of underlying principles (scientific laws). This vision is generally referred to as the ‘unity of science’.

Principle 10 – Scientific fundamentalism is a metaphysical monism: there is one scientific truth for one reality based on one set of underlying principles (scientific laws). This monistic vision is generally referred to as the ‘Unity of Science’

All the convoluted complication of complexity – the mess of multiplicity of objects, their properties, relations, and aggregations – can be simplified and reduced by analysis, by adopting a philosophy approximating monism that describes the many in terms of the few. Scientifically we do this by means of the elementary particle, generalization to principles and laws, and systematization.

For some physicists there is a goal like a ‘unified field theory’: when quantum mechanics is reconciled with relativity then our account of the physical world will be complete.

Principle 11 – The unity of science (metaphysical monism) – there is one scientific truth for one reality based on one set of underlying principles (scientific laws)

Does this universal character of physics give some kind of precedence to physics: does it make physics more ‘fundamental’?

Principle 12 – Because physics is broad in scope it seems to encompass or absorb other disciplines of more limited scope.

Citations

[1] Ellis 2005

[2] see Naomi Thompson and Fictionalism about grounding https://www.youtube.com/watch?v=yMO64-21aik

[3] Fictionalism can apply across many domains. So, for example, we can be fictionalist about numbers (i.e. numbers have no referents, but they are useful) and morality (there is no objective right or wrong, but the notion of right and wrong, good and bad serve an important role in human life)

References

Ellis, G.F.R. 2005. Physics, complexity and causality. Nature 435: 743

Physical reductionism is possible but explanatory reductionism is not.

Supervenience of the mental on the neurological was an idea introduced by Donald Davidson as a dependence relationship.

The article on reality and representation also discussed the way our minds, that is, our cognition based on the objects of our perception, attempt to put order into the confusing complexity of mental categories that make up reality. Working on the scientific image can improve the categories we use to describe the nature of reality but it does not give us an overall structure. We give structure to reality by applying metaphors that generally work well for us in daily life – by distinguishing between: what is bigger and what is smaller; what is contained in or is a part of something else; what is simple and what is tied to other factors in a complex relationship; and by what can be ranked or valued in relation to something else.

It was also noted that when we describe the physical world we do so from different perspectives: we can give different accounts and explanations of the same physical state of affairs. So, for example, we can give physical, chemical, biological, psychological, sociological accounts of what is the same physical situation.

The question then arises as to whether any one particular mode of explanation and description should have priority over others and, if so, for what reason. That is the topic of this article.

The problem of reduction in science brings together a web of ideas, beliefs and assumptions about the world. To help connect some of the threads of this story I have organized the discussion into a set of principles that can be used for easy reference.

So far we have considered cognitive segregation, the way our minds divide the world into meaningful categories of understanding, our percepts and concepts, and the way that our cognition allows us to, as it were, look beyond the world of our biologically-given human perception (the manifest image) to a less anthropocentric world that allows us to not only investigate the way other sentient organisms perceive the world but to investigate the composition and operation of the external world itself.

What about the world of solid objects around us? Our curiosity about substance stretches back to at least Democritus and his world of fundamental indivisible particles called atoms. This was not an observed world but a postulated metaphorical world. By the 1940s it was thought that we had reached the truly fundamental constituents of matter when atoms were split into protons, electrons and neutrons. The metaphor was still of ‘particles’ like billiard balls rotating in a solar-system-like way around a nucleus. The world of particles would be transformed into one of forces made up of fields. Since the 1940s the metaphor has changed again. Particles have been replaced by waves: so the world outside our minds is perhaps best characterized as space which consists of interacting vibrating fields. The Higgs field explains where ‘particles’ get their masses.

Humans are, nevertheless, special. Our unique mode of representation and comprehension (our reasoning faculty and the capacity to communicate and store information using symbolic languages) allows us to look beyond the world of direct experience (the manifest image) towards the way the world actually is (the scientific image).

This is amply demonstrated by the time-honoured deference to ‘hard’ sciences like maths, physics and chemistry when compared to a ‘soft’ science like biology.

A force is due to a field and a field is something that has energy and a value in space and time like a magnetic field, temperature, and wind speed.

With increasing complexity comes greater difficulty in predicting outcomes. As a consequence biological principles and patterns seem to lack the precision and universality that we see in the laws of physics. Biological principles are derived from highly complex organisational and causal networks and open systems with a vast number of variables in which no two organisms are structurally identical. We might think that physics in accounting for the behaviour of the planets in the solar system has achieved much but the impressive and universal predictive laws of celestial mechanics can be derived relatively simply from the positions and momenta of planetary bodies in a relatively closed system. The number of variables is few.

Because the physical world ‘contains’ living organisms as a part, does it follow that the universal laws of physics ‘contain’ those of biology? It is tempting to assume that the scope of physics is universal and that other realms of knowledge are simply sub-sets of physics. Biology, however, is spatiotemporally bounded;[8] it takes the laws of physics as given; it is answering different questions in a different realm of thought. We could conceive replacing biology with the physical sciences, thus making biology part of a system of strict universal laws, but even if that were possible ‘we would not have explained the phenomena of biology. We would have rendered them invisible’.[9] Some laws apply over the whole range of scales.

Perhaps an explanation at one level does not require an explanation at another – or, at least, not at a level that is distant from it? We might explain chemistry in terms of physics but biology is conceptually more distant. We can feel cognitive focus at work here … atomic numbers, Maxwell’s equations, or the theory of relativity are not directly relevant when we work within the biological domain, or at least they are taken for granted as background. Hence the absurdity of explaining sociological phenomena in terms of physics and chemistry.

For example, since the large is explained analytically in terms of the small, we intuitively place greater value on the small, giving it ontological precedence simply by virtue of size (but see Principle 3). Biologists no longer claim, as they once did, that living matter is quite different in kind from inanimate matter, but this is a matter of perspective (all matter is physical matter but not all matter is biological matter). Many people once believed that the mind was inhabited by a spirit or soul and that, in a similar way, bodies were also inhabited by some special kind of spirit or vital force (élan vital, entelechy). This general view, known as vitalism, is now discredited. The existence of such forces is not only implausible but, since they cannot be detected and studied, they are of no explanatory value. They are best ignored.

Physics has its own problems with scale as it wrestles to reconcile the behaviour of matter at the small distances of quantum physics and the vast scale of cosmology, and with the break-down of laws at the Big Bang or the singularities of Black Holes. Whether we look at the patterns in nature described by Newton, Einstein, Joule, Faraday, Maxwell or the various laws of thermodynamics, the link to biology frequently seems tenuous. Of course the physics of matter is important to know about when studying nerves and macromolecules, but much of this is incidental to many biological questions.

In its most basic form foundationalism regards matter as the only reality, but even the mechanistic philosophers of the Scientific Revolution recognised that this matter was in motion, and today we realize the significance of not just matter but its mode of organization.

A flat ontology removes the necessity for the grounding of an object in something other than itself. There is no need for the Principle of Sufficient Reason. Explanations and reasons do not provide underlying truth or get closer to reality; they simply express, or ‘reduce’, one scale or mode of existence in terms of another.